June 3, 2025

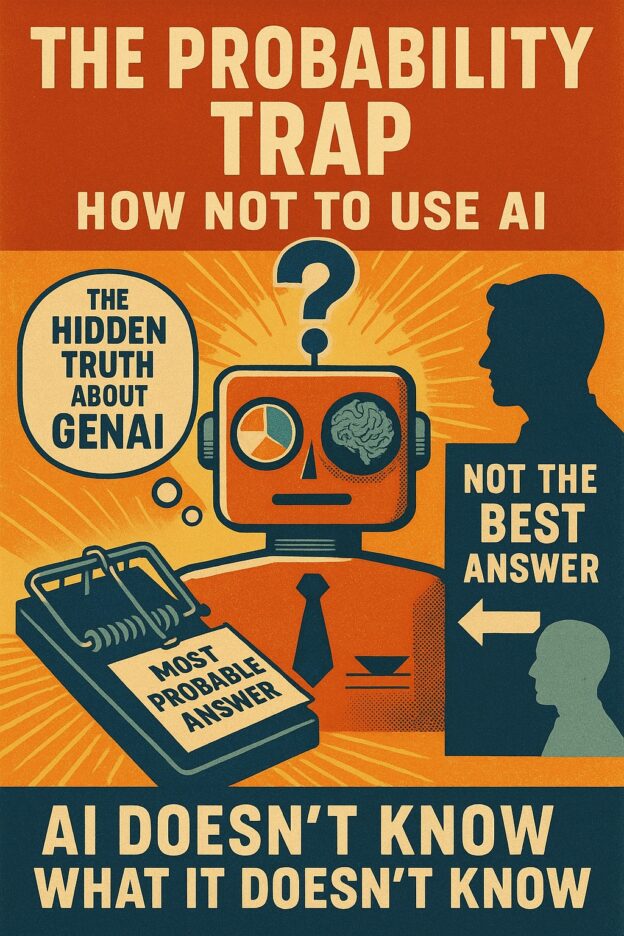

The hidden truth about GenAI

When you ask a large language model a question, it doesn’t think; it calculates probabilities. It does not look anything up in its training data when it answers. Instead, it generates the statistically most likely response, one token at a time, based on patterns it learned during training. This fundamental architecture creates a profound blind spot that most users don’t recognize: the most probable answer is often not the best answer.

Consider asking an AI about entering a new market. The most probable response will synthesize conventional wisdom, established frameworks, and common approaches, because that’s what appears most frequently in business literature. But the breakthrough strategy might be contrarian, context-specific, or require the kind of intuitive leap that comes from years of experience in similar situations. The AI will confidently deliver the “textbook” answer while missing the unconventional insight that could make the difference.

This isn’t a bug; it’s how these models are designed. They excel at finding the statistical center of human knowledge, but innovation and wisdom often live at the edges.
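To make “most probable” concrete, here is a minimal Python sketch of the selection step. It is a toy under stated assumptions, not how any particular model is implemented: the candidate answers and their scores are invented for illustration, and a real model scores individual tokens rather than whole strategies.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidates and made-up scores: the "textbook" answer
# scores highest because it appears most often in the source material.
candidates = [
    "textbook market-entry framework",
    "partner with a local incumbent",
    "contrarian niche-first launch",
]
scores = [3.0, 1.5, 0.5]

probs = softmax(scores)

# Greedy selection always returns the single most probable candidate.
greedy = candidates[probs.index(max(probs))]

def sample(options, weights, rng):
    """Draw one option in proportion to its probability."""
    return rng.choices(options, weights=weights, k=1)[0]

print("greedy pick:", greedy)
print("one sampled pick:", sample(candidates, probs, random.Random(0)))
```

Nothing in this calculation knows which option is right for your situation. The less common options keep some probability, but the scores reflect only how often each pattern showed up, which is the blind spot described above.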

The Probability Trap

The probability-based nature of AI creates several systematic biases:

Consensus bias: AI gravitates toward widely-held views, even when they’re outdated or context-inappropriate. If most sources say “the best practice is X,” the AI will recommend X, regardless of whether your specific situation calls for something different.

Recency weighting: Training data cutoffs mean AI might miss the latest developments. More subtly, it may overweight recent trends that aren’t yet proven while underweighting time-tested wisdom that appears less frequently in recent sources. The rapid growth in data creation over time exacerbates this, because newer material makes up an ever larger share of what the model learns from.

Confidence without conviction: AI delivers probabilistic answers with apparent certainty. It can’t distinguish between “this is statistically common” and “this is definitely right for your situation.”

Missing the meta-context: Humans instinctively adjust their thinking based on stakes, timing, relationships, and unspoken constraints. AI processes the explicit question but often misses the real question underneath.

The Human Advantage: Thinking Beyond Probability

Human cognition works fundamentally differently. We don’t just pattern-match; we also:

Reason from principles: When faced with novel situations, humans can work from first principles rather than relying solely on historical patterns. We can ask “what should work here?” rather than just “what has worked before?”

Apply contextual judgment: Humans excel at reading between the lines, understanding stakeholder dynamics, and recognizing when standard approaches won’t work because of unique circumstances.

Integrate experiential wisdom: Professional intuition, that “gut feeling” developed through years of experience, often leads to insights that no amount of data synthesis can provide.

Navigate ambiguity and paradox: Real-world problems often require holding contradictory ideas in tension. Humans can embrace this complexity while AI tends to resolve it toward statistical norms.

Think counterfactually: We naturally consider “what if” scenarios and second-order effects that may not appear explicitly in training data.

The AI Advantage: Synthesis and Scale

AI brings complementary strengths that humans struggle to match:

Comprehensive synthesis: AI can simultaneously draw from thousands of sources, identifying patterns across disciplines and domains that no human could process.

Unbiased aggregation: While AI has systematic biases of its own, and can reproduce biases present in its training data, it is less prone to many in-the-moment human cognitive biases such as confirmation bias, the availability heuristic, or motivated reasoning.

Consistent application: AI applies the same analytical rigor regardless of mood, fatigue, or personal investment in outcomes.

Speed and iteration: AI can rapidly explore multiple angles and approaches, generating diverse perspectives for human consideration.

A New Framework for Human-AI Collaboration

Understanding these complementary strengths suggests a more sophisticated approach to AI collaboration:

Start with AI for breadth, finish with humans for depth. Then iterate.

Use AI to map the landscape: gather relevant information, identify common approaches, and surface considerations you might have missed. Then apply human judgment to determine what’s most relevant to your specific context and constraints. Keep iterating this way until you reach a conclusion.

Question the confident answer

When AI gives you a definitive response, probe further. Ask for alternative approaches, edge cases, or reasons why the conventional wisdom might not apply. The most valuable insights often emerge from this second-level questioning. Challenge AI to critique its own responses before accepting them.

Use AI to stress-test human intuition

If you have a strong instinct about a decision, use AI to explore the opposing view. What data supports a different approach? What are the risks you might be overlooking? This helps validate genuine insight while catching blind spots.

Combine AI research with human synthesis

Let AI do the heavy lifting of information gathering and initial analysis, but reserve the final synthesis and decision-making for human judgment. AI can tell you what usually works; humans can determine what should work in this specific situation. There is no substitute for human wisdom and experience.

Iterate between AI and human reasoning

Rather than treating AI as an oracle, use it as a thinking partner. Present your reasoning to AI, get its perspective, refine your thinking, and repeat. This iterative process often produces better outcomes than either approach alone.
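For readers who work with models programmatically, the workflow above can be sketched in a few lines of Python. This is one possible shape, not a prescribed method: `ask_model` is a hypothetical stand-in for whatever function sends a prompt to your model and returns text, and the follow-up prompts are illustrative wording only.

```python
from typing import Callable, Dict, List, Optional

def second_level_prompts(question: str, first_answer: str) -> List[str]:
    """Build follow-up prompts that probe a confident first answer."""
    context = f"Question: {question}\nFirst answer: {first_answer}\n"
    return [
        context + "Critique this answer. Where is it weakest?",
        context + "Give two alternative approaches and their trade-offs.",
        context + "When would the conventional wisdom here not apply?",
    ]

def review_round(question: str, ask_model: Callable[[str], str]) -> Dict[str, object]:
    """Run one AI pass: a first answer plus second-level questioning.

    The 'decision' field is deliberately left empty; a person fills it in.
    """
    first_answer = ask_model(question)
    follow_ups = [ask_model(p) for p in second_level_prompts(question, first_answer)]
    decision: Optional[str] = None  # reserved for human judgment
    return {
        "question": question,
        "first_answer": first_answer,
        "follow_ups": follow_ups,
        "decision": decision,
    }

# Stub model so the sketch runs without any external service.
def stub_model(prompt: str) -> str:
    return f"(model reply to a prompt of {len(prompt)} characters)"

result = review_round("Should we enter this new market?", stub_model)
print(len(result["follow_ups"]), "follow-up replies collected")
```

The design choice that matters is the empty `decision` field: the code gathers breadth and pushback, and the final synthesis stays with the person who knows the context.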

Practical Guidelines for Professionals

For consultants: Use AI to rapidly understand new industries and gather initial insights, but don’t present AI-generated frameworks as final recommendations. Your value lies in adapting those insights to client-specific contexts and stakeholder dynamics.

For researchers: AI excels at literature reviews and identifying patterns across studies, but human insight is crucial for identifying gaps, questioning assumptions, and designing methodologies that probe beyond established knowledge.

For business leaders: AI can quickly analyze market data and competitive landscapes, but strategic decisions require human judgment about timing, organizational capabilities, and stakeholder reactions that may not be captured in historical data.

The Wisdom to Know the Difference

The most effective AI users develop a meta-skill: knowing when to trust AI insights and when to rely on human judgment.

This requires understanding not just what AI can do, but how it does it—and where its probabilistic nature serves you well versus where it becomes a limitation.

The future of knowledge work isn’t about humans versus AI, or even humans using AI as a tool. It’s about creating a new form of hybrid thinking that leverages AI’s pattern recognition and synthesis capabilities while preserving space for human wisdom, intuition, and contextual judgment.

The most probable answer is often good enough – but don’t conflate what’s merely probable with what’s optimal or best.

When it matters most—when you’re trying to solve novel problems, navigate complex stakeholder situations, innovate, or make decisions with significant consequences—the best answer usually requires the kind of thinking that no algorithm can replicate.

The competitive advantage will belong to those who master this distinction.