June 3, 2025

Abstract

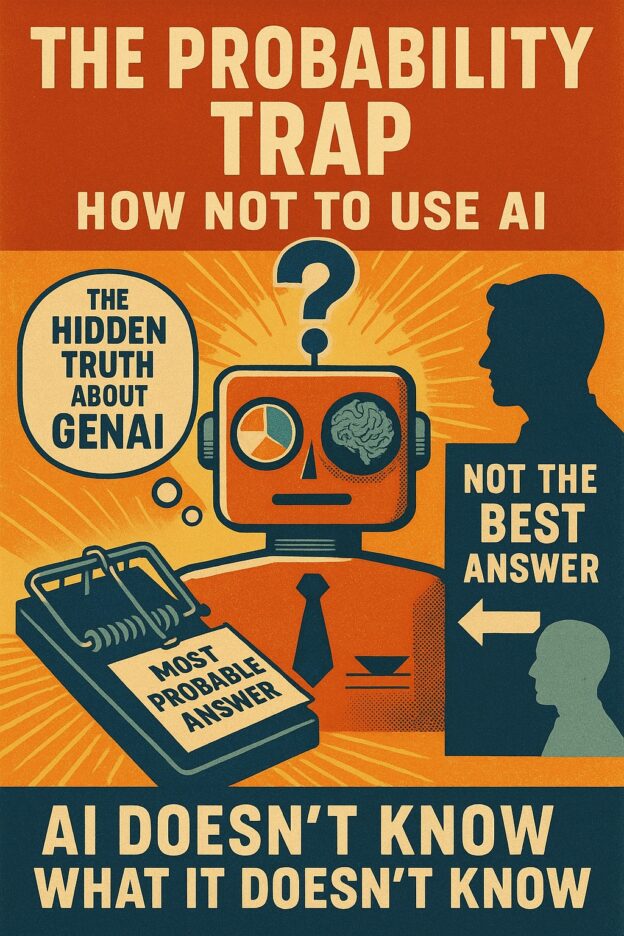

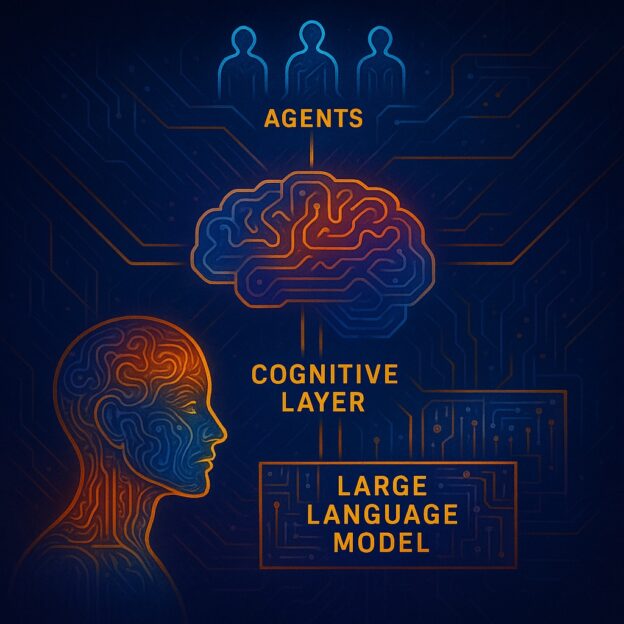

As artificial intelligence (AI) systems, especially large language models (LLMs), become increasingly embedded in critical societal functions, understanding and managing their epistemic capabilities and limitations becomes paramount. This paper provides a rigorous and comprehensive epistemological framework for analyzing AI-generated knowledge, explicitly defining and categorizing the structural, operational, and emergent knowledge limitations inherent in contemporary AI models.…

“Epistemology and Metacognition in Artificial Intelligence: Defining, Classifying, and Governing the Limits of AI Knowledge”