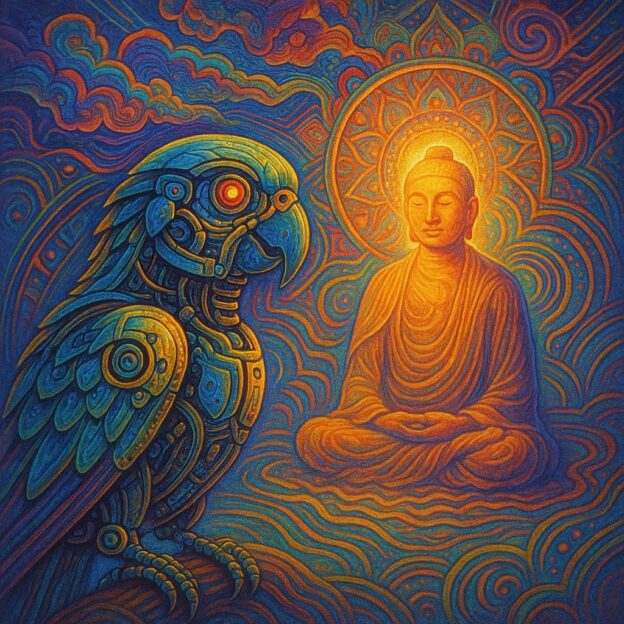

The Dream of an Artificial Zen Master:

If we make an artificial Zen master,

And it perfectly reproduces everything

that a real Zen master can say or do,

Is it a Zen master?

My commentary:

Not necessarily. To be an actual Zen master it would have to be sentient.…